My laptop is faster than your Elastic cluster

Posted on March 30, 2024 • 4 minutes • 787 words

Last week David Kemp of REA Group gave a presentation on how realestate.com.au copes with the large volume of daily notifications for new properties published on their website. He cited figures of about 2M-3M existing notification requests (called “saved searches”) and about 2K-3K new properties listed daily. Their implementation relies on the percolate queries feature of Elastic.

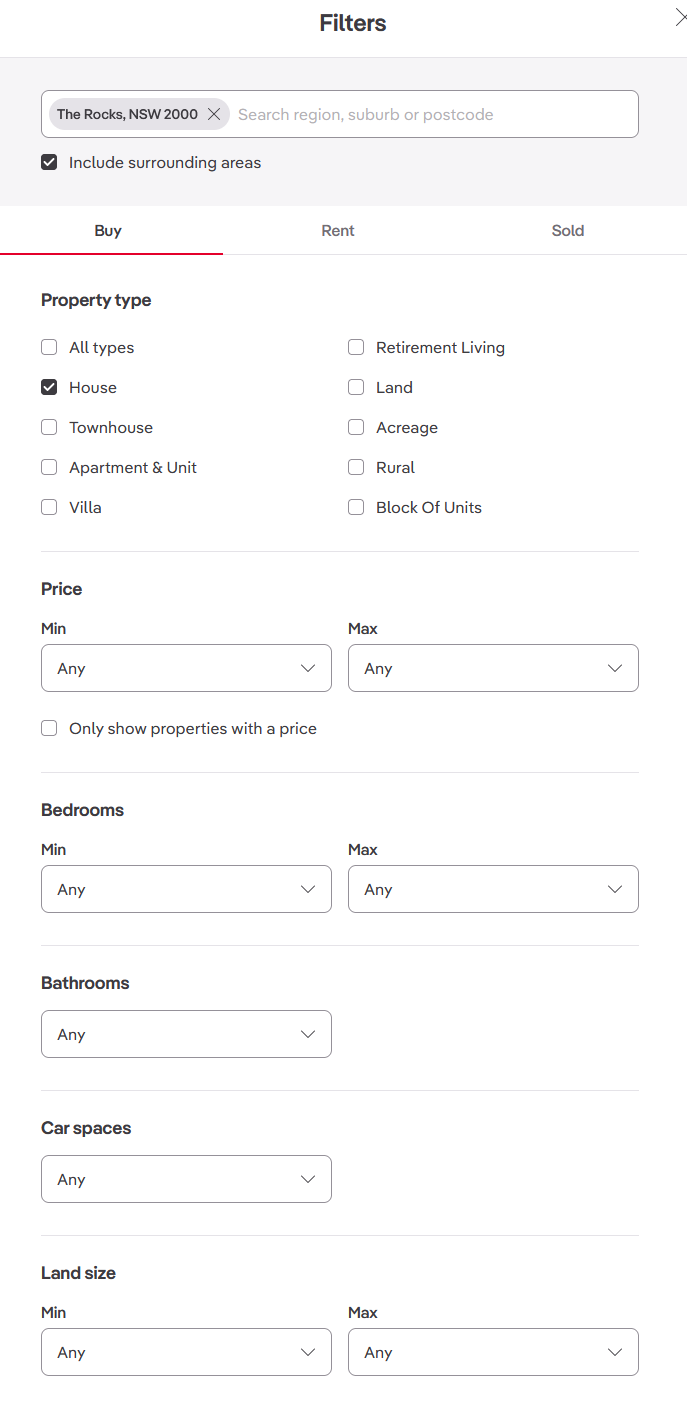

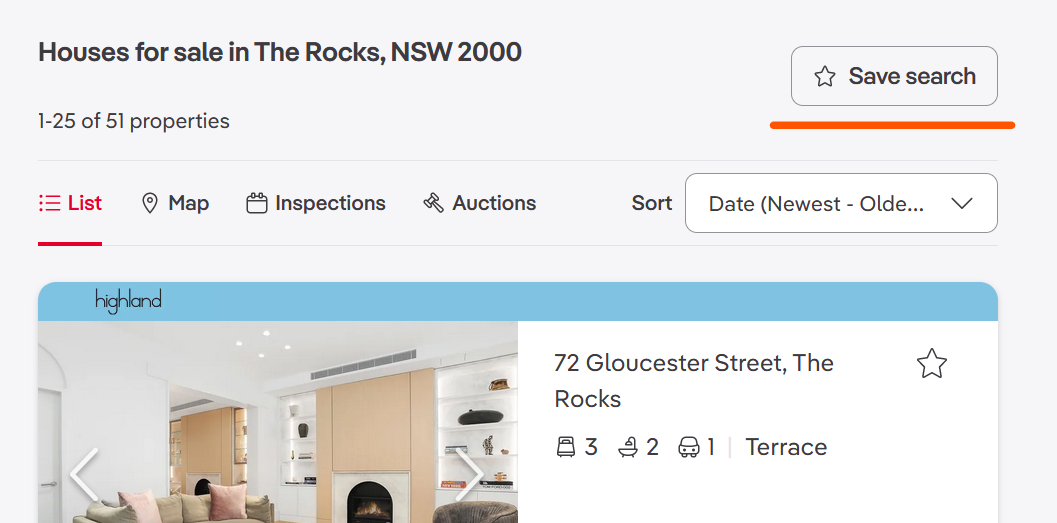

The name “saved searches” originates in the way users subscribe to the notifications:

- They search for a rental or sale property at a specific location, say ‘Sydney’ or ‘Alice Springs’, and optionally indicate the property type and features such as bedrooms and land size.

- After the search is performed, they can press the “Save search” button to get daily emails with new properties matching their current search.

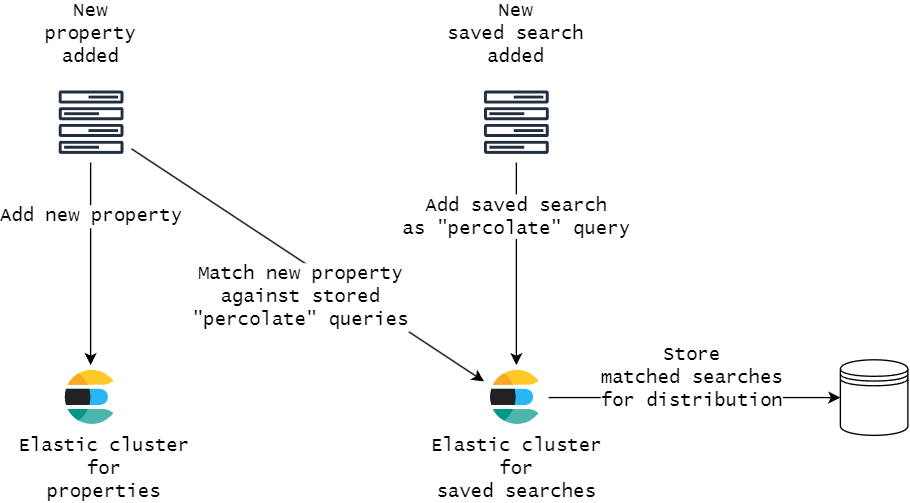

REA engineers run separate Elastic clusters: one for storing properties and another for storing and matching saved searches. A simplified architecture of this solution looks like this:

The properties get added throughout the day and are matched against the saved searches on the second cluster. The matching searches get stored in a database for later distribution via email or mobile notifications. Only 20 properties are included in a notification/email to prevent scraping of the listings.

A small Elastic cluster needs at least 3 nodes (usually, 4 or more for better data sharding). These nodes need at least 32 GB of RAM each, as a rule.

I got curious how well a single laptop could handle this task. Can it finish within a tolerable amount of time, say 10-15 minutes, if I use crude search techniques? After all, a linear search for each of \(n\) elements among \(m\) elements has a complexity of \(O(n \cdot m)\).

Disclaimer: I am not challenging the approach the REA folks have taken. My goal is to estimate how quickly a similar (not the same!) problem can be solved with some basic code. I am sure David and his team have had more constraints and requirements than my code covers.

Technologies used: .NET 8 and a junior developer called ChatGPT/Copilot.

I put a limit of a few hours (ideally, no more than 3 hours) for the whole thing. This means there was time only for basic optimizations.

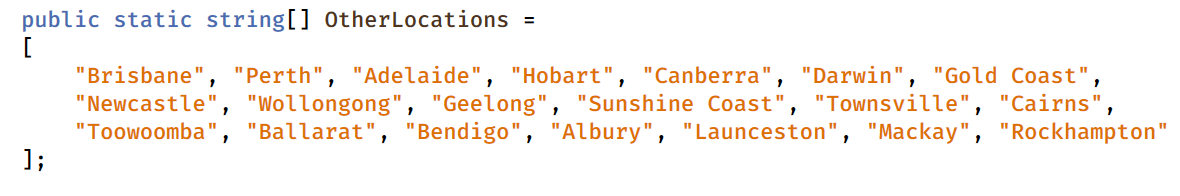

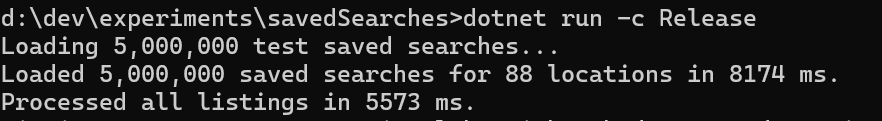

The assumptions: there are 5M saved searches, 5K newly listed properties, and 88 different Australian suburbs in use. My goal was to simulate a larger number of properties and matches in capital cities (sorry, Brisbane!)

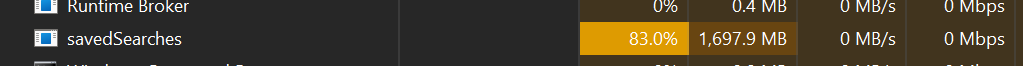

The idea is simple: we load all saved searches and all new properties into RAM and then match the properties to all searches. A rough calculation has shown that 8 GB of RAM should be enough for this task.

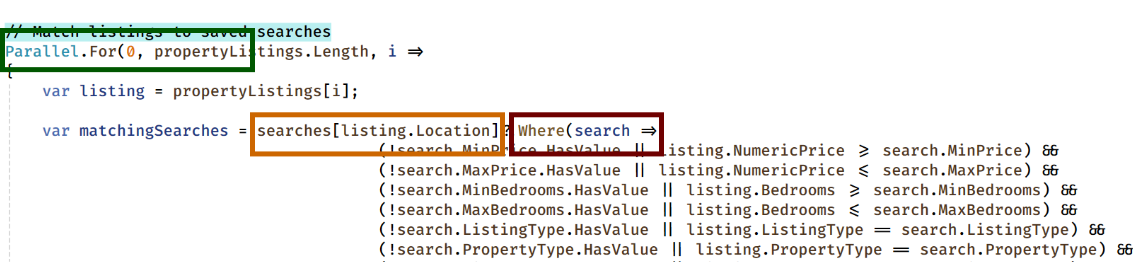

The optimizations: first, narrow the saved searches down to those for the property's location and then run a linear search through that subset. Second, run the linear searches on all CPU cores, as this is an embarrassingly parallel task.
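The two optimizations can be sketched roughly as follows. This is not the code from the repo (which is .NET); it is a minimal Python illustration, and the `SavedSearch` and `Property` fields, the matching rule and the `workers` parameter are all assumptions made up for the example. Note that CPython threads do not give real CPU parallelism because of the GIL, so the thread pool here only shows the shape of the fan-out: every property is matched independently of the others.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass


@dataclass(frozen=True)
class SavedSearch:
    # Hypothetical fields; real saved searches carry more criteria.
    search_id: int
    suburb: str
    min_bedrooms: int
    max_price: int


@dataclass(frozen=True)
class Property:
    # Hypothetical fields, mirroring the search criteria above.
    property_id: int
    suburb: str
    bedrooms: int
    price: int


def build_index(searches):
    """Group saved searches by suburb so a property scans only one bucket."""
    index = defaultdict(list)
    for search in searches:
        index[search.suburb].append(search)
    return index


def match_property(prop, index):
    """Linear scan over the saved searches registered for the property's suburb."""
    bucket = index.get(prop.suburb, [])
    return [
        s.search_id
        for s in bucket
        if prop.bedrooms >= s.min_bedrooms and prop.price <= s.max_price
    ]


def match_all(properties, index, workers=4):
    """Match each property independently; pool.map keeps the input order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        hits = pool.map(lambda p: match_property(p, index), properties)
        return {p.property_id: ids for p, ids in zip(properties, hits)}


searches = [
    SavedSearch(1, "Sydney", 2, 900_000),
    SavedSearch(2, "Sydney", 4, 2_000_000),
    SavedSearch(3, "Alice Springs", 1, 500_000),
]
properties = [
    Property(10, "Sydney", 3, 850_000),
    Property(11, "Alice Springs", 2, 450_000),
    Property(12, "Hobart", 3, 600_000),
]
result = match_all(properties, build_index(searches))
print(result)
```

The index is built once and only read afterwards, which is why the workers can share it without any locking.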

Using only 88 suburbs makes my code slower: the linear search has to go through 5,000,000/88 = 56,818 saved searches for each property. In reality, searches are distributed across a much larger number of suburbs (15,353 suburbs and localities according to the Australian Bureau of Statistics).
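To put rough numbers on this, here is a small back-of-the-envelope calculation. It assumes the saved searches are spread evenly across suburbs, which is a simplification: the test data deliberately favours capital cities, so the real buckets are uneven.

```python
SAVED_SEARCHES = 5_000_000
NEW_PROPERTIES = 5_000


def comparisons(suburbs):
    """Total property-to-search comparisons with searches split evenly over `suburbs` buckets."""
    return NEW_PROPERTIES * SAVED_SEARCHES // suburbs


naive = comparisons(1)            # no location filter at all
test_data = comparisons(88)       # the 88 suburbs used in this experiment
realistic = comparisons(15_353)   # suburbs and localities counted by the ABS

print(f"{naive:,} / {test_data:,} / {realistic:,}")
```

Under that assumption the location filter cuts the work from 25 billion comparisons to about 284 million, and a realistic spread of suburbs would bring it down to roughly 1.6 million.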

After running the first version of the code, I spent time looking for a bug that prevented the search from running over all the records. As it turned out, there was no bug - modern computers are just really fast:

A battery-powered 3-year-old laptop (Microsoft Surface Laptop 4 with AMD Ryzen 7 and 16 GB of RAM) matches all 5,000 properties in under 6 seconds.

What about memory usage? I haven’t seen the process go over 2 GB of RAM.

With this kind of performance, there is no need to store the results in a database. Notifications can be sent from the same machine using the results from RAM. If notifications run on a separate cluster, the results can be served via API from a memory cache.
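As an illustration of working with the results straight from RAM, the sketch below turns per-property matches into per-search notification lists and applies the cap of 20 properties mentioned in the talk. The function name and the shape of the `matches` dictionary are assumptions for this example, not something taken from REA's system or from the repo.

```python
from collections import defaultdict

MAX_PER_NOTIFICATION = 20  # the cap mentioned in the talk


def build_notifications(matches, cap=MAX_PER_NOTIFICATION):
    """Invert {property_id: [search_id, ...]} into {search_id: [property_id, ...]}.

    Each saved search keeps at most `cap` properties, in the order they were seen.
    """
    per_search = defaultdict(list)
    for property_id, search_ids in matches.items():
        for search_id in search_ids:
            if len(per_search[search_id]) < cap:
                per_search[search_id].append(property_id)
    return dict(per_search)


# Hypothetical matching output: property 10 matched searches 1 and 2, and so on.
matches = {10: [1, 2], 11: [1], 12: []}
notifications = build_notifications(matches)
capped = build_notifications(matches, cap=1)
print(notifications, capped)
```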

Besides, there is no need for a permanently running cluster. A task of this magnitude can be executed on a VM spun up every day for 15 minutes to send out all notifications.

Interestingly, all 5M saved searches fit into a 200 MB SQLite database. Admittedly, I didn’t add any indices or additional filters on searches, but they wouldn’t result in more than 20x size inflation (4 GB).
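For a sense of how little is involved, here is a minimal sketch of keeping saved searches in SQLite and loading them back into memory, using Python's built-in `sqlite3` module. The table name and columns are hypothetical and are not the schema used in the repo.

```python
import sqlite3

# ":memory:" keeps the demo self-contained; a file path would make it persistent.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE saved_search ("
    " id INTEGER PRIMARY KEY,"
    " suburb TEXT NOT NULL,"
    " min_bedrooms INTEGER NOT NULL,"
    " max_price INTEGER NOT NULL)"
)

rows = [(1, "Sydney", 2, 900_000), (2, "Alice Springs", 1, 500_000)]
with conn:  # commits the inserts as a single transaction
    conn.executemany("INSERT INTO saved_search VALUES (?, ?, ?, ?)", rows)

# Load everything back into RAM in one pass, ready for in-memory matching.
loaded = conn.execute(
    "SELECT id, suburb, min_bedrooms, max_price FROM saved_search ORDER BY id"
).fetchall()
conn.close()
print(loaded)
```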

All code is available in this GitHub repo: https://github.com/haybatov/savedSearches/ .

Computers are so fast and have so much memory nowadays that many processing tasks become trivial and unexpectedly quick. Next time you think about spinning up another database cluster, give a simple script a try - you might be pleasantly surprised.

And my thanks to David Kemp for the interesting talk. It is worth watching when the video is out. He has covered more material than I could mention in this post.